Ronald T. Azuma, Ph.D.

Selected Projects

Light Field displays (Display Week and SIGGRAPH 2020)

Almost all 3D displays today (including VR and AR displays) are stereo displays that cause eyestrain because the viewer cannot focus at different depths. Light field displays can reduce or prevent this eyestrain by letting the viewer focus at different depths simply by fixating on different objects in the 3D scene. We propose and demonstrate a view-dependent approach that offers a practical way to implement light field displays with display resolutions available today or in the near future.

The paper, presentation, and demo video are available on my Light Field displays project webpage.

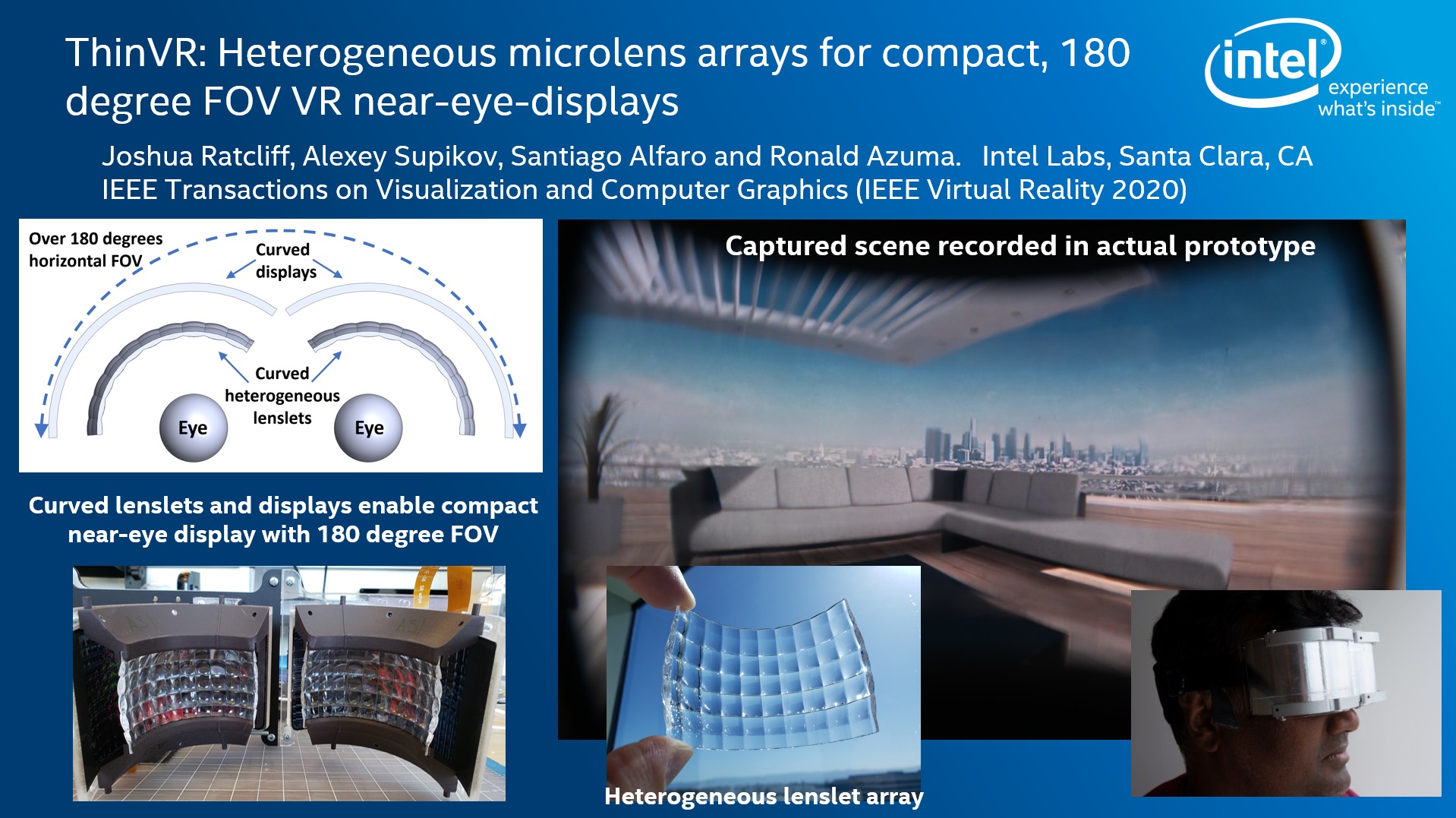

ThinVR (IEEE VR and SIGGRAPH 2020)

VR displays today are bulky and typically limit the field of view (FOV) to around 90 degrees. Increasing the FOV to 180 degrees increases the bulk even further. ThinVR is an approach that simultaneously achieves a 180-degree horizontal FOV and a compact form factor. We achieve this through a computational display approach that combines curved displays with custom-designed heterogeneous lenslet arrays. We demonstrated that the core approach works by implementing it in prototypes using both static and dynamic displays.

The paper, presentation, supplemental videos, and images are available on my ThinVR project webpage.

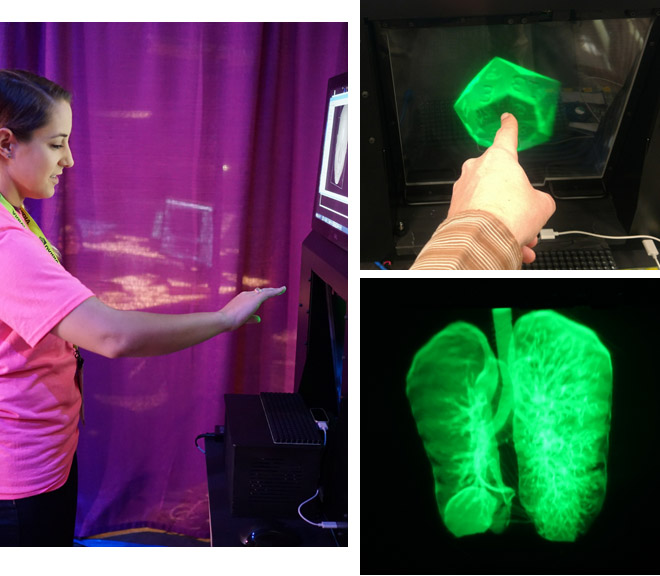

3D Aerial Display (SIGGRAPH 2017)

This is a brief description. For more details, please see my Mid-Air Display at SIGGRAPH 2017 webpage.

At SIGGRAPH 2017, Intel Labs demonstrated the experience of touching a virtual 3D object that looks like a hologram, floating in mid-air in front of a display. The viewer does not wear glasses and does not experience the eyestrain commonly associated with stereo displays. As the viewer reaches out to touch the floating 3D object, the system adjusts the rendering so that the fingers cut away parts of the 3D object, and an ultrasonic array triggers tactile sensations on the viewer's fingers.

What the pictures and videos cannot adequately convey is that the floating object really looks as if it exists in front of the display. All depth cues are correct, and most viewers would believe they were seeing a hologram, although it is actually a volumetric display viewed through an optical re-imaging plate.

The system we demonstrated was built by Seth Hunter and a company called Misty West. My role was champion and director.

SIGGRAPH 2017 Emerging Technologies paper

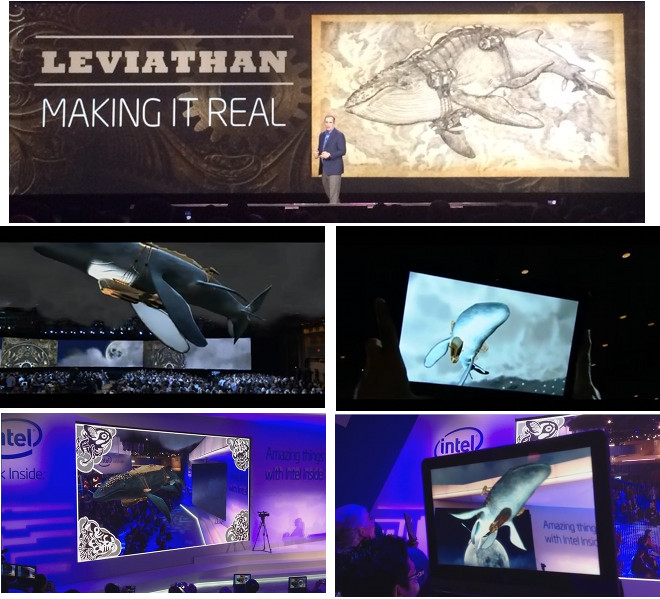

Leviathan at CES 2014

This is a brief description of Leviathan. For many more details, please see my Leviathan at CES 2014 webpage.

At CES 2014, Intel ran three Augmented Reality demonstrations inspired by the steampunk fantasy world of Leviathan, to excite people about the potential for Augmented Reality to enable new forms of media for storytelling. One demo ran as part of the Intel CEO keynote, where we brought the Leviathan to life, flying it over the heads of an audience of 2,500 via Augmented Reality techniques.

I wrote the tracker that provided the AR tablet view of Leviathan in the Intel CEO keynote, and I personally operated the tablet during the keynote. My team built the AR application framework that ran on the tablet. I was the main Intel technical expert advising the development and execution of the three Leviathan demos, and I manned the Intel booth to help run the two booth demos.

Tawny Schlieski championed the Leviathan project within Intel Labs, and she recruited me and my team to help with the technical execution. To make Leviathan a reality, Intel partnered with the Worldbuilding lab in the USC School of Cinematic Arts and engaged the services of Metaio and a production company called Wondros.

Indirect Augmented Reality

Accurate tracking for AR in general outdoor environments is well beyond the current state of the art. Despite that, we would like to build compelling outdoor AR experiences that work robustly. Perhaps we should rethink how to generate an AR experience. In traditional AR, the user has a direct view of the real world and we augment that view with virtual content. That can make the rendering simple but requires solving a very difficult tracking problem. Instead, what if we render both what the user perceives to be real and what is virtual? Then we have complete control over what is rendered onto the screen. Registration errors between real and virtual objects no longer occur on the display (instead, they occur between the mobile device and the real world). This trades a difficult tracking problem for a difficult rendering problem, but if we use panoramic images of real environments to render the real world, then this Indirect Augmented Reality approach becomes feasible.
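In Indirect AR, the device's orientation sensors select which part of a pre-captured panorama to show, so tracking error shifts the whole rendered scene instead of misaligning virtual content against the real one. As a minimal sketch, assuming an equirectangular panorama with yaw 0 at its left edge and pitch +90 at its top row (both hypothetical conventions, not details from the original system), the view direction maps to a panorama pixel like this:

```python
def panorama_pixel(yaw_deg, pitch_deg, width, height):
    """Map a view direction to a pixel in an equirectangular panorama.

    Hypothetical conventions: yaw 0 is the left edge and increases to the
    right (wrapping every 360 degrees); pitch +90 is the top row, -90 the bottom.
    """
    u = (yaw_deg % 360.0) / 360.0          # horizontal fraction in [0, 1)
    v = (90.0 - pitch_deg) / 180.0         # vertical fraction in [0, 1]
    x = min(int(u * width), width - 1)
    y = min(max(int(v * height), 0), height - 1)  # clamp the poles to valid rows
    return x, y
```

Because the virtual content is drawn into the same panorama-based view, it stays aligned with the rendered "real" scene even when this lookup is off by a few degrees.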

We conducted user studies suggesting that, under certain conditions, this new Indirect AR approach provides a more compelling and convincing illusion than traditional AR. Sixteen of 18 participants did not even realize that they were not directly looking at the real world.

Jason Wither conducted the user study and suggested the term “Indirect Augmented Reality.”

C&G journal paper | Video of traditional AR condition | Video of new Indirect AR condition

Mediated Experiences

Instead of using AR displays to augment a user’s view of the real world, an alternate approach toward augmentation is to embed both the real world and the users with sensors and displays. This approach combines aspects of pervasive and ubiquitous computing, but the goal is to enable new forms of mobile media, hence the name Mediated Experiences. We designed, built, and evaluated a novel system and application that uses the real-time social behaviors of users to both control and drive the content of a new type of game.

Mat Laibowitz led this project and designed and built this system. I wrote the software for two applications that ran on two different parts of the system, and I supervised the team.

IEEE Pervasive Computing paper

The Westwood Experience

The Westwood Experience was a showcase demonstration and research experiment built by the team I led at Nokia Research Center Hollywood. It was a novel location-based experience that connected a narrative to evocative real locations through Mixed Reality and other techniques. The Westwood Experience has its own set of web pages.

In this project, I was deeply involved in the concept development, and the Peet’s and Yamato effects were my ideas. I served as technical director, overseeing everything that ran on the N900, verifying everything and coming up with fallback measures to deal with technical problems. I organized every group that went through the Westwood Experience and personally led the execution of each performance.

Tracking Hollywood Star icons

AR tracking using markers is not new. However, demonstrating that you can track off a class of markers that already exist in the environment and doing it successfully despite very harsh conditions (glare, dynamic shadows, occlusions) is new. We developed this for the star icons on the Hollywood Walk of Fame because at one point we were planning to build an experience located on Hollywood Blvd.

Thommen Korah is the computer vision expert who developed the final algorithms and results.


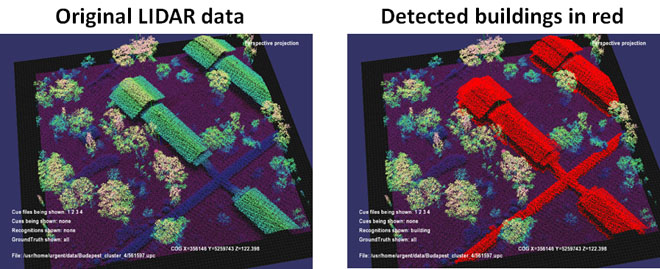

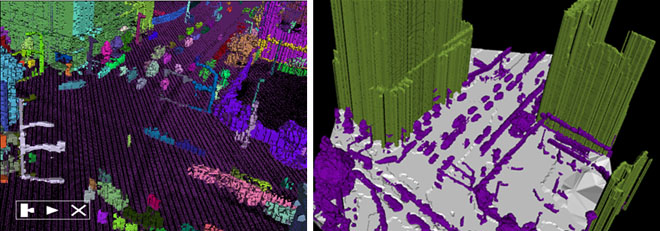

Exploiting LIDAR data

A basic problem with almost all outdoor AR experiences is that they really don’t know much about the real world around the user. They may have a database of a handful of points of interest, but they typically don’t know where the buildings are, let alone other objects like trees, light posts, etc. This limitation greatly constrains visualization techniques and our ability to create a compelling outdoor AR experience.

However, a few companies are now collecting urban databases consisting not just of urban imagery but also of 3D point clouds collected from ground and aerial LIDAR sensors. There is vast potential to exploit this data to better understand urban environments for a variety of purposes, including model reconstruction, AR interfaces and experiences, and tracking.

At HRL, I was part of a team on the DARPA URGENT project that researched ways to automatically segment and classify objects from LIDAR data (i.e. identifying objects as buildings, cars, trees, etc.). I am not a computer vision expert, so I built the software architecture and visualization tools. At Nokia, we had access to Navteq ground-based LIDAR data and were developing a variety of capabilities based on such data. There, my role was manager and champion.

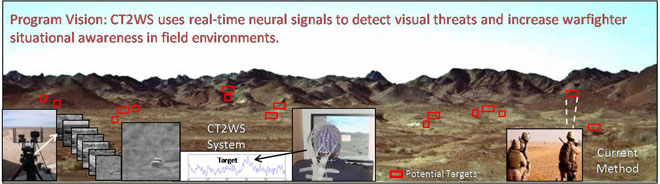

CT2WS

I did not work on the CT2WS (Cognitive Technology Threat Warning System) project, but I am including this here because I played a key role in something equally important: winning the contract funding for that project! (HRL also won subsequent additional funding after I left.) I designed and built visualizations and scripted interactive demonstrations to explain the concept that became part of our proposal and helped explain the system and approach to DARPA program managers.

I’m not allowed to show images of my visualizations, but the project is described on a DARPA web page, and DARPA has released project images, shown below.

Pre-launch detection of RPGs

At HRL, I took up a challenge to address an old problem facing the US military: the threat from RPGs (rocket-propelled grenades). Although I knew almost nothing about this topic, I did research and worked with other people in HRL to devise a new approach to this problem: detecting these threats before the launch phase. And that’s about all I can say. (Sorry, no pictures!) This project was an example of my flexibility, where I researched a new topic and served as the catalyst and instigator to develop a solution that was outside my areas of technical expertise. I was successful in getting contract funding for this new approach.

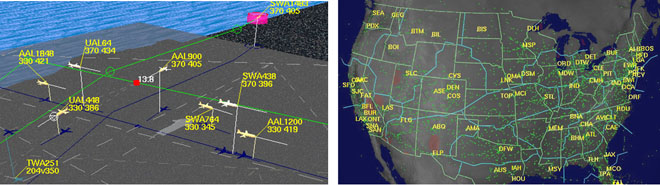

Air Traffic Visualization

I designed, implemented, and demonstrated a variety of applications, systems and interfaces for visualizing air traffic. These include visualizations of conflict detection and resolution in Free Flight, a prototype AR display for an ATC ground traffic controller, non-geographic visualization modes for National Airspace System data, an experiment in using autostereoscopic displays for ATC, and visualizations and interfaces for closely-spaced parallel approaches.

HFES2005 paper | AIAA2005 paper | CGA2000 paper | Vis1999 paper | AIAA1996 paper

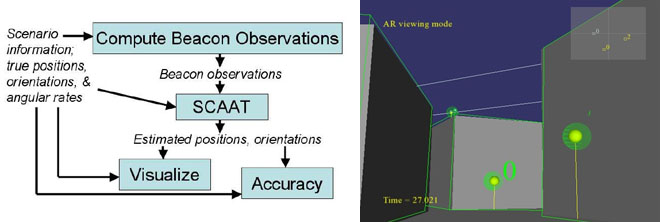

AR tracking concept based on a few mobile beacons

Enabling AR for dismounted soldiers is a difficult task: few assumptions can be made about the environment. I designed and evaluated a concept for an AR tracking system that relied upon adding a small number of beacons to the environment, in combination with soldier-worn sensors. Some of these beacons would be carried by small unmanned air vehicles. I implemented this concept in simulation and determined rules of thumb for achieving the “1 in 100” accuracy specified by our military subject matter expert.

I took great care in designing and running this simulation. For example, I procured the SCAAT tracking code used by Greg Welch in the HiBall tracker to ensure the tracking algorithm was correct, I verified that perfect results occurred with perfect sensor input, and I implemented visualization tools to verify computed intermediate results. I also recorded realistic orientation motion and processed it to generate orientation and angular rate data that matched perfectly. I used the Mersenne Twister as the random number generator.

I performed all phases of this project: helping to acquire the funding, designing and writing the simulation and visualization code, collecting and processing the motion data, performing the Monte Carlo analysis, and writing the paper.
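To illustrate what a Monte Carlo tracking analysis looks like, here is a minimal sketch that is much simpler than the original simulation: it does not use SCAAT, and it assumes a hypothetical 2D setup where a soldier's position is triangulated from noisy bearings to two fixed beacons. CPython's `random` module is itself built on the Mersenne Twister (MT19937), so seeding `random.Random` gives a reproducible run. The beacon layout, noise level, and 95th-percentile metric are all illustrative assumptions.

```python
import math
import random


def bearing(frm, to):
    """Angle (radians) of the direction from point frm to point to."""
    return math.atan2(to[1] - frm[1], to[0] - frm[0])


def triangulate(b1, th1, b2, th2):
    """Intersect two bearing lines p = b_i - t_i * (cos th_i, sin th_i)."""
    d1 = (math.cos(th1), math.sin(th1))
    d2 = (math.cos(th2), math.sin(th2))
    # Solve t1*d1 - t2*d2 = b1 - b2 for t1 with Cramer's rule.
    rx, ry = b1[0] - b2[0], b1[1] - b2[1]
    det = d1[0] * (-d2[1]) - (-d2[0]) * d1[1]
    t1 = (rx * (-d2[1]) - (-d2[0]) * ry) / det
    return (b1[0] - t1 * d1[0], b1[1] - t1 * d1[1])


def monte_carlo(true_pos, b1, b2, sigma_deg, trials, seed=1):
    """Return the 95th-percentile position error over many noisy trials."""
    rng = random.Random(seed)  # MT19937 under the hood in CPython
    sigma = math.radians(sigma_deg)
    errors = []
    for _ in range(trials):
        # Perturb each true bearing with Gaussian angular noise.
        th1 = bearing(true_pos, b1) + rng.gauss(0.0, sigma)
        th2 = bearing(true_pos, b2) + rng.gauss(0.0, sigma)
        est = triangulate(b1, th1, b2, th2)
        errors.append(math.dist(est, true_pos))
    errors.sort()
    return errors[int(0.95 * (trials - 1))]
```

Sweeping parameters such as beacon distance or sensor noise through a function like this, and checking the resulting error against an accuracy target, is the kind of analysis from which rules of thumb can be extracted.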

The first video link listed below shows an external view of one simulation run, where a soldier walks through a MOUT site. The soldier's computed position is shown as a colored disk under the soldier. The soldier's field of view is rendered, and when beacons are spotted they are drawn in yellow. The second video shows the same simulation run from a first-person perspective. Buildings and beacons have virtual green outlines rendered over the soldier's view of the real world, showing how accurate or inaccurate the tracking is at that point in the simulation.

ISMAR2006 paper | Video of simulation (external view) | Video of simulation (first person view)

Motion-stabilized outdoor AR tracking

At HRL, I built the first motion-stabilized outdoor AR tracking system. This was not the first outdoor AR system, but it was probably the first to show good registration in an outdoor environment. Although this work dates back to 1998, it demonstrated more accurate registration than the later AR browsers on mobile phones that relied on GPS and compass sensors, partly because we had better sensors but also because of careful system design, calibration, and sensor fusion. USC worked with us to correct orientation errors via template matching. I won the funding from DARPA, served as Principal Investigator, designed the technical approaches, and supervised the team that built the system.

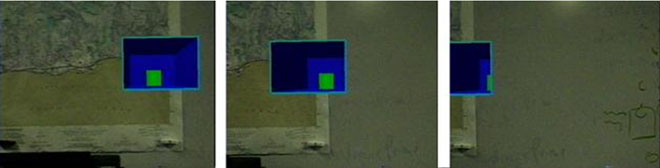

Perceptual problems in Augmented Reality

Augmenting a real-world scene with virtual content has the potential to cause optical illusions and perceptual problems. We investigated a simple task: draw a virtual object that is behind a real wall. I worked with an experimental scientist to conduct a user study investigating different visualization techniques. We found that people tended to perceive the virtual object to be either on the surface of the wall or in front of the wall, even when rendered with a surrounding virtual cut-away box and strong motion-parallax cues.

This paper did not answer which visualization methods would overcome this problem and cause such information to be perceived correctly and intuitively. However, this was one of the very first papers to identify and demonstrate that this is a real problem, and many subsequent papers by other researchers have worked on it.

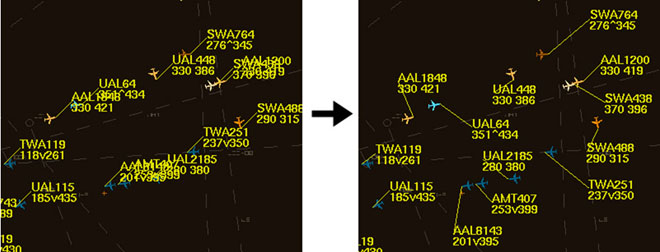

Automatic label placement

A basic problem in augmenting an object with a label is that the virtual label may cover other labels or other objects that you don't want covered. In real-time applications, automatic label placement can be desirable. Optimal label placement is NP-complete, however, so real-time applications must rely on heuristics rather than perfect solutions. I designed, implemented, and evaluated a new heuristic based on identifying clusters of labels. This was first implemented for an air traffic control visualization, and I subsequently applied it to an AR application. I worked with an experimental scientist to evaluate this new heuristic against other real-time heuristics and an ideal non-real-time solution (simulated annealing).
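The core idea behind a cluster-based heuristic is that labels which can never touch each other do not need to be placed jointly. The sketch below is a hypothetical illustration of that idea, not the published heuristic: each label gets four candidate positions around its anchor point, labels whose candidates could overlap are grouped into clusters with union-find, and each cluster is searched exhaustively for the placement with the fewest overlaps. The fixed box size and the four-position scheme are assumptions.

```python
from itertools import product

W, H = 4.0, 1.0  # hypothetical label box size (same for every label)
# Offsets of the box's lower-left corner for the NE, NW, SE, SW positions.
OFFSETS = [(0.0, 0.0), (-W, 0.0), (0.0, -H), (-W, -H)]


def box(anchor, k):
    """Axis-aligned box (x0, y0, x1, y1) for candidate position k."""
    dx, dy = OFFSETS[k]
    x, y = anchor[0] + dx, anchor[1] + dy
    return (x, y, x + W, y + H)


def overlap(a, b):
    """True if two boxes overlap (touching edges do not count)."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]


def clusters(anchors):
    """Group labels whose candidate boxes could ever overlap (union-find)."""
    parent = list(range(len(anchors)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i in range(len(anchors)):
        for j in range(i + 1, len(anchors)):
            if any(overlap(box(anchors[i], a), box(anchors[j], b))
                   for a in range(4) for b in range(4)):
                parent[find(i)] = find(j)
    groups = {}
    for i in range(len(anchors)):
        groups.setdefault(find(i), []).append(i)
    return list(groups.values())


def place_labels(anchors):
    """Choose a candidate position per label, searching each cluster separately."""
    choice = [0] * len(anchors)
    for members in clusters(anchors):
        best, best_cost = None, None
        for combo in product(range(4), repeat=len(members)):
            boxes = [box(anchors[m], c) for m, c in zip(members, combo)]
            cost = sum(overlap(boxes[i], boxes[j])
                       for i in range(len(boxes)) for j in range(i + 1, len(boxes)))
            if best_cost is None or cost < best_cost:
                best, best_cost = combo, cost
                if cost == 0:
                    break  # cannot do better than no overlaps
        for m, c in zip(members, best):
            choice[m] = c
    return choice
```

The exhaustive search is exponential in cluster size, so this only stays fast when clusters are small; that trade-off is why comparing against a slower, near-ideal method such as simulated annealing is informative.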

Analysis of Augmented Reality

I’m known for defining the term “Augmented Reality,” but what is important is not the definition per se but rather that it was part of a detailed analysis I did of the field when it was just getting started. This analysis identified the characteristics of AR and many initial research problems, and it helped guide the early development of the field. According to a study done by Mark Billinghurst, my original survey is the single most cited work in the field of AR.

Scalable optoelectronic head tracker

We built the first demonstrated 6D tracking system for head-mounted displays that was scalable to any room size. It mounted upward-looking photodiode sensors onto an HMD and provided a large array of infrared LEDs in the ceiling above the user. The LEDs were in standard 2-foot by 2-foot ceiling panels that fit into many ceiling infrastructures. To cover a wider area, we could simply add more panels. The system controlled which LEDs to illuminate. Only one LED was turned on at any instant, and the system intelligently turned on only the LEDs that the photodiodes were observing, taking advantage of coherence. This system was demonstrated at SIGGRAPH 1991.
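Exploiting coherence means that the last known head pose predicts which ceiling LEDs the upward-looking sensors can currently see, so the system only needs to flash those. A minimal sketch, assuming a single sensor pointing straight up with a conical field of view and a hypothetical 4 x 4 LED layout per 2-foot panel (neither detail comes from the original system):

```python
import math

PANEL_FT = 2.0  # ceiling panels are 2 ft x 2 ft


def led_grid(n_panels_x, n_panels_y, leds_per_side=4):
    """Ceiling LED (x, y) positions in feet; leds_per_side is a hypothetical layout."""
    step = PANEL_FT / leds_per_side
    return [((i + 0.5) * step, (j + 0.5) * step)
            for i in range(n_panels_x * leds_per_side)
            for j in range(n_panels_y * leds_per_side)]


def visible_leds(head_pos, leds, ceiling_height, half_fov_deg):
    """Indices of LEDs inside an upward-looking sensor's viewing cone."""
    hx, hy, hz = head_pos
    dz = ceiling_height - hz  # vertical distance from sensor to ceiling
    if dz <= 0:
        return []
    radius = dz * math.tan(math.radians(half_fov_deg))  # cone footprint on ceiling
    return [i for i, (x, y) in enumerate(leds)
            if math.hypot(x - hx, y - hy) <= radius]


def flash_schedule(visible):
    """Turn on exactly one LED at a time, cycling through the visible set."""
    for i in visible:
        yield i
```

Only the LEDs within the footprint are flashed, so adding ceiling panels enlarges the tracked area without lengthening each measurement cycle.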

This took a team of people to build. My roles were the development, simulation, coding and verification of the algorithm that took observations of LEDs and computed the 6D head location from that, the design of the overall software architecture, photodiode sensor calibration, and general system operation.

A descendant of this system became a commercial product: the HiBall tracker sold by 3rdTech.

I3D paper | SPIE paper | Video (MP4 format, 27 MB)


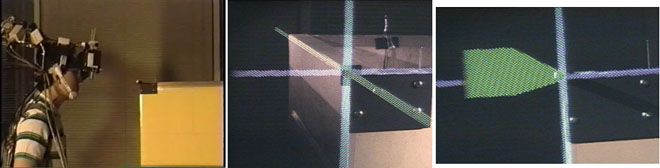

First working Augmented Reality system (demonstration of accurate registration)

For my dissertation, I built the first Augmented Reality system that actually worked (i.e., it had alignment between 3D real and virtual objects accurate enough that the typical user perceived the objects to coexist in the same space). There were previous AR systems, but they had very large registration errors. For this work, I focused solely on the registration problem and the tracking, calibration, and system work needed to solve it with an optical see-through head-mounted display. Instead of running a complex application, the system did only one thing: it placed a red/green/blue virtual 3D axis on one corner of a real wooden crate. I developed a hybrid tracking system that fused inertial measurements with an optoelectronic 6D tracker so that the registration remained accurate even during rapid head motion. This required me to develop and implement head-motion prediction to compensate for the system latency. This work was published at SIGGRAPH 1994, and I also published a SIGGRAPH 1995 paper analyzing head-motion prediction and latency.
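Why prediction matters: if the head turns at angular rate ω and the end-to-end latency is Δ, an unpredicted display lags by roughly ωΔ, so 100 degrees per second with 50 ms of latency is already a 5-degree error. The sketch below is a much simpler stand-in for the predictor described in the papers: single-axis polynomial extrapolation using gyro rate and a finite-difference estimate of angular acceleration. The function names and numbers are hypothetical.

```python
def estimate_alpha(omega_prev_dps, omega_now_dps, dt_s):
    """Finite-difference angular acceleration (deg/s^2) from two gyro samples."""
    return (omega_now_dps - omega_prev_dps) / dt_s


def predict_angle(theta_deg, omega_dps, alpha_dps2, latency_s):
    """Extrapolate one rotation axis forward by the system latency.

    theta(t + latency) ~= theta + omega * latency + 0.5 * alpha * latency^2
    """
    return theta_deg + omega_dps * latency_s + 0.5 * alpha_dps2 * latency_s ** 2
```

For example, `predict_angle(10.0, 100.0, 0.0, 0.05)` gives about 15 degrees, so the image is rendered for where the head will be when it appears rather than where it was when the tracker was sampled. Inertial sensors help here because they measure ω (and, indirectly, α) directly instead of differentiating noisy position data.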

SIGGRAPH 94 paper | SIGGRAPH 95 paper | Dissertation | Video (MP4 format, 47 MB)